This group uses a chatbot/Discord relay persistent notification

tracked

Beq Janus

There has been an increase in the number of inworld chat groups that utilise a bot account to relay chat from the group to a channel on a Discord server and vice versa. Used well, this engages people who are not inworld in the community discussions and allows increased participation.

However, this means that your chat is being sent out of SL to a remote server. There is no opt-in for this. If a group decides to do it, they might send a notice (which will vanish in 14 days), they might update the group covenant (for which there is no notification of change), or they might do nothing at all. You are then happily chatting to the 5 or 6 people in the group in SL when suddenly a person who is not online, and who may not even be in the group membership list, starts to participate.

I would like to see the ability for the group owners/admins to set a persistent flag on the group that indicates that a relay is/may be used. This is akin to the "This meeting is being recorded" notification in Zoom or Teams and is almost certainly a legal requirement under either GDPR or the DSA. It would allow people to see immediately that their chat is not as private as they thought and make an informed choice about their data.

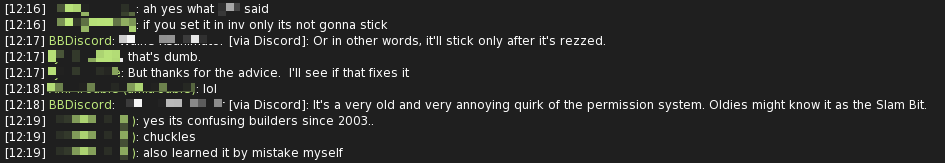

An example of such a bot being put to very legitimate and positive use is in the Builders Brewery group.

Assuming Canny supports markdown images (attached as well just in case)!

Notably there is nothing in the covenant or elsewhere to tell you that the group is relayed.

What I would like is to be able to see something like this...

This could simply be a property on the group that implied that recording might be used. It only needs to make people aware that they should ask more questions about their data.

It could, of course, be a more dynamic notifier that turns on when an identified account UUID is active in the group.
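To make the two options concrete, here is a minimal sketch of how a viewer or server might decide what notice to show. Everything in it is hypothetical: `GroupChatSession`, its fields, and the notice texts are illustrative names of my own, not an existing Second Life API.

```python
from dataclasses import dataclass, field
from typing import Optional, Set
from uuid import UUID


@dataclass
class GroupChatSession:
    """Hypothetical model of a group chat session (not a real viewer type)."""
    # Static property set by group owners/admins: "a relay is/may be used".
    relay_declared: bool = False
    # Account UUIDs that the group has identified as relay bots.
    known_relay_agents: Set[UUID] = field(default_factory=set)
    # Agents currently taking part in the chat session.
    active_participants: Set[UUID] = field(default_factory=set)


def relay_notice(session: GroupChatSession) -> Optional[str]:
    """Return the notice to display, or None if nothing has been declared."""
    # Dynamic case: an identified relay account is active right now.
    if session.known_relay_agents & session.active_participants:
        return "A chat relay is active in this group right now."
    # Static case: the group has only declared that relaying may happen.
    if session.relay_declared:
        return "This group may relay chat outside Second Life."
    return None
```

The static flag only needs one boolean on the group; the dynamic variant additionally needs the group to register which account UUIDs are relays.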

If we're adding new properties to chat groups...see also my forthcoming Canny entitled "Persistent topics for group chats please"

Artesia Heartsong

Nothing stops you from putting a flag in your group description already. But it will never stop people from joining a group with an (unregistered scripted agent) bot whose purpose is to capture all group chat.

Spidey Linden

marked this post as tracked

Issue tracked. We have no estimate when it may be implemented. Please see future updates here.

SweetShaylie Resident

I'm not going to go into what is legal and not, since I don't know international law to a degree where I would actually be able to contribute, but at least having a flag in the group allowing people to see that the group chat is shared outside of SL would definitely be good, so people know if they want to partake in that group chat or not.

That being said, I actually do like being able to see group chat messages relayed outside of SL in some cases, since I often help people in SL from my discord account on my phone when they ask for support in some groups I'm a member of. It's a great tool for giving support to people while being away from the computer.

SpiritSparrow Skydancer

I rarely use BB anymore because of this issue. If I see any Discord header talking, I close out that chat and sometimes leave the group. I will never understand why they do this, why not just send the messages from SL? I really do not get it.

Zandrae Nova

SpiritSparrow Skydancer From the standpoint of a moderator, I can see why this might be attractive. SL groups don't seem to save chat history. Stuff in Discord does.

This allows a group owner/moderator to monitor chat all the time, or to look back at what was said while they were doing work, uni, or sleeping.

This lets people respond to customer inquiries and also remove trolls and problem players that they might not be otherwise aware of.

Extrude Ragu

It's against TOS to share private messages with other users inside or outside of the platform. Surely then these Discord bots, by sharing group chat that is intended for group members only, are breaking TOS? I had actually considered setting up a bot for this in the past but reached the conclusion that I would probably be breaking TOS myself.

BillyFishCake Resident

Extrude Ragu I usually don't jump in on discussions about this stuff. But I totally hate how everyone says "It's against TOS to share private messages" without ever actually reading the TOS themselves. Here is what it says and can be found here -> https://wiki.secondlife.com/wiki/Linden_Lab_Official:Residents'_privacy_rights

Gwyneth Llewelyn

BillyFishCake Resident thank you. I was just going to post the same thing.

Obviously, LL cannot be falsely accused of violating any rules or laws when it's residents that don't read the ToS and attribute to themselves rules that never existed... and then get immensely disappointed and frustrated that things are not as they would like them to be.

Although Beq's idea has merit on its own, and I've also voted it up because (if technically possible!) I'd love to know if a certain group chat is being relayed to the outside world or not, in practice, people can simply copy and paste the chat to their Discord group, manually, and do it "legally", even if not ethically, and it would be very very very very hard to enforce in a court of law a claim of "violation of European/some American privacy rules". This is made even harder when taking into account that every avatar name is already a pseudonym and there is no way for anyone to link such a name to a real person located at a physical address, which is exactly what European law (and some American/Canadian/Australian law that I've briefly looked at) wants to prevent.

In fact, I could come up with a completely fake chat log (imagine, created with ChatGPT) and post it on Discord. Since all avatar names are pseudonyms anyway, and therefore no privacy laws can be broken, all I can claim is creative freedom of expression and consider such a chat log merely "a work of art, not related to anyone real". This would naturally make a lot of people very angry, but they could essentially do nothing about it.

Granted, this is the purely legal view of things; from an ethical/moral perspective, such behaviour would be really obnoxious, and one might expect not to get any more Christmas cards after pulling such a stunt on their fellow residents...

Journey Bunny

Backwards compatibility makes this one tricky.

I like the idea; I'll add one further 'reason it's important': Everything ever said can be trivially "looked up" when logged to Discord. A person joining a group's Discord may gain access to all the records of chat that occurred when they weren't even a member of the group. People chatting in groups suddenly need to be concerned about things they've said, in what groups, with which members across forever. Essentially, saying a thing in a group that does not have your stalker no longer guarantees that your stalker is unable to find what you've said to that group in the future.

Issues: An optional flag would plausibly need to be Off by default, and only turned on by groups that choose to invite users to feel the icy grip of paranoia. Will groups even do it? (Remember Bonnies? Thousands of cryptically named "people" regularly roamed the grid for years, popping to region center for 5 seconds and then moving to the next region...all perfectly capable of gathering tons of data. But once people saw a consistent naming scheme that openly declared "I'm a bot", the panic set in about surveillance.)

So, if such a flag is Off by default, and if such a flag is optional, and if such a flag has the possibility to decrease a user's odds of staying in a group... What does no flag mean? Seeing the flag NOT set would not mean "no bot recording us here". Would we be better served just with something like a message when we open a group chat, reminding us that a recording could be happening? That'd be more accurately informational, but also would likely induce panic in groups regardless of recording.
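One way to picture the "what does no flag mean?" problem is a sketch in which the unset state never claims privacy. The names `RelayFlag` and `chat_banner`, and the banner wording, are made up for illustration and do not describe any existing viewer feature.

```python
from enum import Enum


class RelayFlag(Enum):
    """Hypothetical group property; there is deliberately no 'private' state."""
    DECLARED = "declared"
    NOT_DECLARED = "not declared"


def chat_banner(flag: RelayFlag) -> str:
    """Text shown when a group chat opens, for either flag state."""
    if flag is RelayFlag.DECLARED:
        return "The owners of this group have declared that chat may be relayed outside SL."
    # An unset flag is not a promise: any member could still be logging chat.
    return "No relay has been declared for this group. Any member could still be logging chat."
```

Under this assumption the flag adds information when set, and the default message doubles as the generic reminder that recording is always possible.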

Solution: Encrypted chat that, in some way, requires a checked-out cert to "export." Unfortunately, probably impossible with an open source viewer. And, additionally, if it's not impossible then it'd plausibly break all past (useful and good) text-based viewer agent capabilities (CSR bots, etc).

Thorny, tricky issue here :(

Salt Peppermint

I think this makes sense for private groups but not so much for public groups that anyone can join at any time

belindacarson Resident

I would vote for this, as it's a potential violation of the GDPR (processing personal info without the consent of the users).

Gwyneth Llewelyn

belindacarson Resident what personal info are you talking about? On a chat transcript (any chat transcript) no personal information (i.e. name, physical address, citizen ID number or equivalent data) is "processed".

You might know that your avatar name is actually under your control, but that's something only you know :) It's not public information, and there is no way to extract that data from LL's servers in any way, and certainly not from text chat.

nya Resident

just put it at the top of your group's description?

Gwyneth Llewelyn

Woolfyy Resident sorry, but no. You're wrong on both counts :) It would be a problem if, instead of the avatar names, such chat transcripts included people's real names and their physical addresses. But we all know that's not the case: that data is impossible to extract from LL's servers. Well, nothing's impossible, but, in twenty years, nobody hacked LL's database of names and addresses, which currently is only used for payment processing, which is very likely only used by Tilia anyway, and even Tilia might not have the full records...

Gwyneth Llewelyn

Woolfyy Resident I should have explained things better...

It's obvious that Linden Lab has all their users' data, and, aye, relatively recently it was even shown that Linden Lab can perfectly well figure out who someone's alts are, even if the connection is shared among different people (say, a couple where both partners use SL, each having different alts, and someone even 'borrowing' each other's computers to log in; but the same also applies to those who use some sort of public network to log in, or their campus network, whatever).

The very interesting article that you link to is about browser fingerprinting, which is slightly more complex for two reasons: you might not be logged in to any service (and thus it is harder to figure out what accounts you use regularly) and, while a lot of information about your system gets 'leaked out' via the browser, not everything does. It just requires a bit more tech to retrieve additional data and combine them all together to get a 'unique' fingerprint. But it's a bit tougher!

Linden Lab, by contrast, has no such issues. Everything you do on Second Life, and that includes all the websites run by Linden Lab, requires logging in, so LL knows very well who you are, from the moment you register your account (on the Web), to the moment you download the official viewer for your platform (you can get it from only one place), to your very first login when you accept the ToS to proceed. (Later on, when buying L$ through Tilia, they will get even more data, of course.)

However, the SL Viewer is not a 'web browser'. It's an application designed by Linden Lab, and they can grab whatever information they wish. Part of that information is even shown on the "About Second Life" panel. You can even see on the logs how Linden Lab sends an almost continuous stream of telemetry data, so they know exactly what you're doing.

And, of course, they can grab the unique serial number of your computer(s), plus the IP address, since those will be available programmatically to any native application running on your computer. Their digital fingerprinting, so to speak, is far easier to capture, since they, Linden Lab, have full control over both the client and the server-side of things.

While it might be argued that LL does not have access to all the data (namely, billing data), whatever they don't know, Tilia knows (at least partially), and Tilia is a fully-owned subsidiary of Linden Lab. Both placed together, therefore, are (seen as a whole) able to map their users to whatever digital fingerprints they may have captured before.

What I argue is that there is nothing inherently wrong with that.

Banks also have access to all my data, including credit card data, or how often I log in to their homebanking system.

The same goes for insurance agencies. They even know my medical history (after all, they pay my bills).

And the list goes on. All these companies know my life in excruciating detail. But (and this is the important thing) they assure me that they're not going to sell my data to anybody else, and that, internally, they will use my data merely to provide their services to me.

This is absolutely and perfectly legal. If any of these organisations with all my data suddenly starts selling it to third parties without my explicit written consent, then they are in trouble (well, in theory at least). They're liable to lawsuits for failing to adequately protect my data from "leaking" from their services. In other words: sure, they're legally allowed to store all that data about me, so long as a few simple rules are kept, namely: my right to privacy; my right to forbid them to sell or even publish my data to anyone else without my consent; my right to demand that data to be deleted (at least that's true in Europe); and so forth. There are agencies which ensure that these organisations "play fair", and, if they don't, there are always the courts.

Now... repeating myself once again, I'm not a legal expert, but... it seems to me, by reading LL's ToS and related documentation, that there is sufficient reason to believe that LL does abide by the above privacy laws, and actually even goes a bit further than that, by storing personal information (name, address, credit card number) with a separate company, in a way that not even LL employees know my name (unless I tell them so). Tilia, by contrast, has not the slightest idea of what my avatar does with the L$ I receive; I'm not even sure if Tilia even gets to know my avatar name, they might just have an internal ID (not my avatar ID!) for clearing purposes, and, ordinarily, employees from either company cannot see the records of the other. Obviously, in case the authorities demand LL & Tilia to release that data, it can be produced, but that's essentially the only moment when data can be correlated.

TL;DR?

AFAIK, LL/Tilia can retrieve all the data they can from their own users, so long as:

- they never ever publish that data, nor sell/give it to third parties;

- they use it strictly for the purposes of providing us with the services we've agreed to pay them for;

- they have established reasonable systems in place to prevent such data from being accessed by others;

- when combined, they only use that data for statistical purposes (using only anonymous/pseudonymous data — at least in Europe);

- in Europe: they allow us to request the deletion of all data pertaining to us.

(Note that on the last point, there might be an exception related to content we created in SL and licensed to others, i.e. in SL terms: we are the authors of an item and sold it to other residents. In that case, "deleting our data" might not mean that the object disappears, since the current owner or licensee also has the right to enjoy content they've acquired legitimately, but merely that our name disappears as the content creator, e.g. the object shows "unknown" as the creator.)

Gwyneth Llewelyn

Woolfyy Resident well, I totally agree with LL being audited on such points by an external company — even if it's just done by a local (i.e., Californian) company, that should go a long way towards properly dealing with any major privacy issues that may arise (assuming they follow such recommendations, obviously). It also will mitigate the damages of litigation — they can always point to the auditors and hold them responsible (or sue them!) if their recommendations weren't enough to satisfy privacy issues.

Then again, I still insist that it's one thing to get statistics about avatar names, UUIDs, even what one avatar is wearing (as many bots are fond of figuring out), and, naturally, where the avatar has been spending time and with whom (other avatars, that is). These bits of information are all possible to extract without violating ToS in any way, and reasonably so, because in none of those scenarios can you trace such information back to the real person who did all those things.

I believe that what you're arguing is that tracking whatever your avatar does trespasses on the avatar's privacy, so to speak; in other words, in the real world, I wouldn't want people to know what my name is and what is my favourite brand of bras or panties. Google/Amazon/Facebook/Apple/etc. might know all of that (and potentially much more!) and that's why they get routinely fined for violating privacy laws, especially when they sell such data to third parties (even if anonymised), which is what at least the first three do (Apple is fond of claiming otherwise regarding the data they collect, and, so far, they've avoided being caught... innocent until proven guilty, right?).

While it's possible that one might argue that tracking your avatar, and your avatar's habits, reveals a lot about who you are as a real person, and therefore not even our avatars should be victims of illicit tracking by third parties, well, personally I'd argue that this is something one would have to prove in a court of law, but I can imagine that, in some jurisdictions, it would be a very, very difficult case.

On a civil lawsuit, one would have to be able to prove with overwhelming documentation how one incurred some sort of personal financial loss for some reason related to how one's avatar has been tracked. Not easy.

On a criminal lawsuit, it would have to be argued that LL maliciously and deliberately provided inadequate privacy protections which allowed others to exploit the system, using its publicly available services and tools, in order to extract information about oneself that can be inferred through tracking of one's avatar. There might be a case for that (I won't pretend that a good lawyer might not come up with a good narrative explaining exactly how such things can happen), especially if one throws in things like psychological harm (attested by experts) directly related to one's avatar's private information having been revealed (publicly or otherwise). It would be a difficult case, sure; but proving LL's malicious intent in allowing this to happen, and proving it beyond any shadow of doubt...? Personally, I don't see that happening in a court of (criminal) law.

Granted, if LL got audited by privacy experts, followed their recommendations, and then applied for some sort of certification (maybe there is something like that in Californian law, I don't know), they would easily defeat any such potential claims; or, rather, they might feel sorry for the resident, of course, and even allow some form of compensation, but LL would offer proof enough of good faith in handling private data as they do, and thus would not be liable to be indicted for any crimes regarding negligence or placing inadequate measures in place to protect their users' privacy. I cannot see it going much further than that.

Now, you also mention something completely different, and here I happen to fully agree with you :) The easiest thing in the world, for a malicious party, is to use a combination of a) a hacked viewer that disregards ToS; b) media on a prim; c) streaming services; d) a simple packet sniffer or even just a SL proxy on the side of the hacker, and to use all those techniques to figure out who the person behind the avatar ultimately is. At the very least they will be able to retrieve the user's IP address (and thus get a good idea of where in the world the person behind the avatar lives). The ability to correlate one's avatar to one's RL location is quite possible, and, from such data, I totally agree that you can extract from SL far more information than anyone is willing to reveal about themselves.

It also doesn't help that the whole communication protocol is unencrypted, which makes a hacker's work far easier — by leaps and bounds.

However, I remain unconvinced that all the above directly implies that LL didn't do a good job of preserving the privacy of people's real life identities.

In fact, a _long_, long time ago (and laws tend to change after almost two decades!), I was involved in a very delicate project using SL. Around here, when there are cases of family violence, the children are usually taken care of by a special court. They're placed in undisclosed locations, and their names are not even fully known by the caretakers (just the first name, or a nickname). While family courts are usually swift in passing sentences, it's also true that, in some extreme cases, the court might decide that it's too dangerous for the children to return to their homes, even if one (or worse, both!) of the parents have to go to prison. Anyway, the point is, those kids are in perpetual danger for some reason, and so they must keep themselves anonymous to the 'outside world', so that they don't get caught by anyone wishing to do them harm. It's sad, but it happens.

Kids, however, want to socialise, and they also need to continue their school education, in safety. This usually requires a complex set of clearances, and an acquaintance of mine ran a non-profit association to put children in contact with IT. He was cleared to visit the children in person and organise at each safehouse (they were spread across a few) a place where they could have a few computers (usually sponsored by a charity, which my acquaintance would contact). He would go from safehouse to safehouse to give his classes, but he was always sad when he had to leave and go to the next one, because that meant leaving a handful of others behind. He wondered what he could do to get them somehow in touch with each other. Back then, the solution he came up with was chatrooms and the early messenger systems. That would also allow him, when at the office, to keep in touch with all children in all homes, so he could also schedule special maintenance calls when the children told him that a computer had broken down or something. And, of course, the children could chat with each other, across safehouses, even if they never personally met.

Well, about that time, we had already been playing around with SL, and the Teen Grid was a reality (it was still in its early stages), and my acquaintance found SL rather exciting, due to its collaborative nature, the ability to learn to script, and the way it allowed participants to be physically isolated from each other, yet work together in the same virtual space. He therefore started to ask for all the clearances and permissions, and that naturally also involved LL. I'm not sure if this is still the case, but there was an option to create avatars strictly bound to a single estate, from which they could never teleport out. This was the solution that finally everybody agreed to be the best and safest one. LL was made aware of the very special circumstances of these children, and allowed them to create accounts without their full legal name. They would be linked to the non-profit, so if by any chance something happened, LL would know whom to contact, in a similar way to how parents are responsible for their underage teens roaming SL (and the Teen Grid back then).

All this was new territory back then — nobody had ever made such an experiment before, and "virtual worlds" were hardly things that we read about on the mainstream media. The overall clearance process was neither trivial nor sloppy; a lot of checking had to be in place, a lot of assurances had to be mutually given, and this would even be demanded by the sponsors — who would not want to contribute any funding for something that could be seen as dangerous for the children.

SL, however, is all about anonymity, no matter if there are ways to hack the system or not. The point is that, from the perspective of the legal documentation (and not the potential technical flaws), LL does, indeed, guarantee a level of privacy that doesn't exist anywhere else (or at least that was the case back then). Strictly speaking of the purely legal framework, there were even some boundaries that could be crossed, in absolute safety, which would otherwise be impossible in the real world. Here is a very simple example: as said, very few people had clearance to actually be in physical proximity of the children, even if it was just to give them classes. The risk was simply too high. However, via SL, such issues didn't exist. It was safe for them if _I_ taught them classes about SL, in SL, so long as we remained at this special island from which they couldn't teleport away, and which was not even shown on the map, much less having permissions for "strangers" to teleport to. The only people who could enter that space were pre-approved by my acquaintance, and that was restricted to those, such as myself, who actually worked with him on the non-profit. Thus, although I technically was _not_ cleared to even learn the children's names, much less be physically near them, nor even have the slightest idea where the safehouses were located (all that information was kept in absolute secrecy and taken _very_ seriously!), it was fine for me to go into SL and chat with the kids and do things together.

There were, obviously, a few rules. The kids weren't allowed to tell each other anything about their families; by now, they were used to that. They were also instructed not to tell _me_ anything, of course. But I was also not supposed to tell them who _I_ was in real life (they only knew I worked for/with the non-profit). This, perhaps surprisingly (at least, it was for me), was not only for the children's sake, but also to protect _me_. It was not unusual for caretakers with full clearance to be in touch with the kids to be victims of acts of violence, perpetrated either by a dangerous parent, or some relatives or close friends, who sincerely believed that the courts were wrong in keeping the children away from their daddy or mommy, and wanted to find out where the children were kept. As such, they would try to find a way to get in touch with someone who knew where the kids were. It was not by coincidence that my acquaintance had the height and overall volume of a Sumo wrestler (although he was absolutely harmless, of course; he just didn't _look_ harmless!). He'd never be "easy prey" for those wanting to extract any information from _him_. That was certainly not _my_ case, or the case of his wife (who also worked with him in SL; at the end, she even learned to do some clothing for the kids, and enjoyed herself as much as the kids themselves, but I digress).

Anyway, long story, but there is a point here that I just wanted to underline. When I talk about SL being a "safe" environment in the sense that LL takes privacy issues very seriously, this is what I'm talking about: the level of privacy and safety that can be guaranteed by LL in their Second Life Grid was enough to get judges, lawyers, charities, non-profits, and their caretakers to consider it "safe", in a scenario which was _not_ hypothetical (unfortunately so!), but a quite real one. There could be no "leaks" about the children's identity; not even the least clue about them could be made public. The level of control over the safety of the children was obsessive and, frankly, a bit disturbing, but how could it be different, if their lives were at stake? (And they _were_ separated from their parents _because_ their lives were at risk: again, not hypothetically, but as a consequence of judgements made by the court regarding their violent parent(s).)

The technical details were actually secondary. The judges did not want "proof" that SL was impossible to hack. Similarly, the courts certainly knew that there were computer viruses that could be spread via the Internet, infect the children's computers, and eventually reveal their IP addresses (and thus the approximate location of the safehouses where they lived). Obviously, those things existed (and still exist). Obviously, _if_ anyone _knew_ that the children used SL in order to be in a "safe" environment, it's conceivable that some of the more dangerous parents (many had criminal records, and were found guilty of criminal association) could hire a clever hacker to specifically target the SL Viewer and therefore compromise security. There are a million possibilities on _how_ the children's identity could be (at least partially) revealed, using clever ruses. I remember spending nights awake thinking about the most terrible scenarios, such as the possibility of someone from the non-profit buying a scripted item, bringing it to the island, and giving it to the children, never suspecting that the item in fact carried a Trojan — imagine one of those radios with the ability to change the music stream, from which you can extract the resident's IP address very easily. Such an object would seem absolutely harmless, and even the stream could _sound_ legitimate enough — the malicious agents would only need to take a look at the streaming server's logs, after all, the rest could be "genuine", in order not to raise any suspicions.

You might wonder if there were _never_ any such attacks, or any risk of discovery. Well, I can only say that, if there were any, I was not cleared to know about them :) unless, of course, some corrective action had to be taken on my part. There was a slight issue at some point because a few of the children posted their phone numbers on their public profiles (all this predates smartphones, BTW). They did that in order for kids of the _other_ safehouses to be able to get in touch with them. There was really no risk of those profiles having been seen by anyone in SL: those "bound avatars", at that time at least, could have these things quite limited, exactly like on the Teen Grid. Nothing could flow from the Teen Grid to the Main Grid (and vice-versa), even though, for all purposes, both were technically the _same_ grid, hosted on the same server(s) and database(s). There was simply a question of permissions which prevented people from seeing what they weren't allowed to see.

The only issue was that in this specific scenario, the kids were instructed never to give away their phone numbers, not even to the other kids, and they had subverted the system (at least that's what I understood back then) by using their SL profiles, which were visible to _all_ children, to announce their phone numbers... this was quickly fixed, of course, and the children were severely reprimanded for their misbehaviour. But that's all there is to it: no harm was done.

But it could have been. After all, kids are curious, and want to see how much they can bend the rules, testing their limits all the time. This was sort of expected. But it was also expected that, at the very least, LL had in place some special "emergency response team" to deal with such cases, and that they would do their best to avoid such situations. In other words: LL's policy has always been one of privacy and safety of one's personal identity, no matter what.

Whew. That said, and even with the potential (technical) risks, and considering that many laws have made security even stricter in the past 15+ years, I still remain unconvinced that LL, somehow, runs an operation that commits the most basic privacy violation, which is to reveal, in any way or format, what RL information they have about their users, inadvertently or maliciously.

VriSeriphim Resident

At the very least, a prominent warning that a scripted agent (aka bot) is a member of a group.

Of course, it should be noted that any agent could be recording the chat.
